Queues are very common in computer science and in real-world programs. A queue is a FIFO (first in, first out) data structure: new elements are added at the rear, and the items inserted first are the first to be removed or served. A thorough collection of queueing systems can be found at queues.io. Task/job queues in particular are used by numerous systems and services and apply to a wide range of applications. They can reduce the complexity of system design and implementation, and they can improve scalability by decoupling the code that produces work from the workers that carry it out. Some of the most popular ones are Celery, RQ (Redis Queue) and Gearman.
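To make the FIFO behaviour concrete, here is a minimal sketch using only Python's standard library. The job names are made up for illustration; nothing here is specific to any of the queueing systems mentioned above.

```python
from collections import deque

# A deque supports fast appends at the rear and fast pops from the front.
queue = deque()

# Enqueue: new elements join at the rear.
for job in ("job-1", "job-2", "job-3"):
    queue.append(job)

# Dequeue: the element that was inserted first is served first.
served = []
while queue:
    served.append(queue.popleft())

print(served)
```

The jobs come out in exactly the order they went in, which is the property a task queue relies on when it says a task has to wait until "its turn comes".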

The one we recently stumbled upon and immediately took advantage of was Celery, which is written in Python and is based on distributed message passing. It focuses on real-time operation but supports scheduling as well. The units of work, called tasks, are executed concurrently on one or more worker servers using multiprocessing. Concurrent workers run in the background waiting for new jobs, and when a task arrives (and its turn comes) a worker processes it. Typical uses of Celery include handling long-running jobs, asynchronous task processing, offloading heavy tasks, job routing and task trees.
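The worker model can be illustrated without Celery at all. The sketch below is a simplified stand-in, not Celery's implementation: it uses threads and an in-process `queue.Queue` from the standard library instead of separate worker processes and a message broker, and the names `run_workers` and `handler` are hypothetical.

```python
import queue
import threading

def run_workers(jobs, handler, num_workers=3):
    """Process every job with a small pool of background worker threads."""
    tasks = queue.Queue()
    results = []
    lock = threading.Lock()

    def worker():
        while True:
            job = tasks.get()              # block until a job arrives
            if job is None:                # sentinel: no more work for this worker
                tasks.task_done()
                break
            try:
                outcome = handler(job)
            except Exception as exc:       # keep the worker alive if a job fails
                outcome = exc
            with lock:
                results.append((job, outcome))
            tasks.task_done()

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for job in jobs:
        tasks.put(job)                     # producer side: enqueue the work
    for _ in threads:
        tasks.put(None)                    # one sentinel per worker
    tasks.join()                           # wait until every item has been handled
    for t in threads:
        t.join()
    return results

squares = run_workers(range(5), lambda n: n * n)
print(sorted(squares))
```

The producer only puts items on the queue and never waits for any particular job to finish. That separation is what a task queue such as Celery provides across processes and machines.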

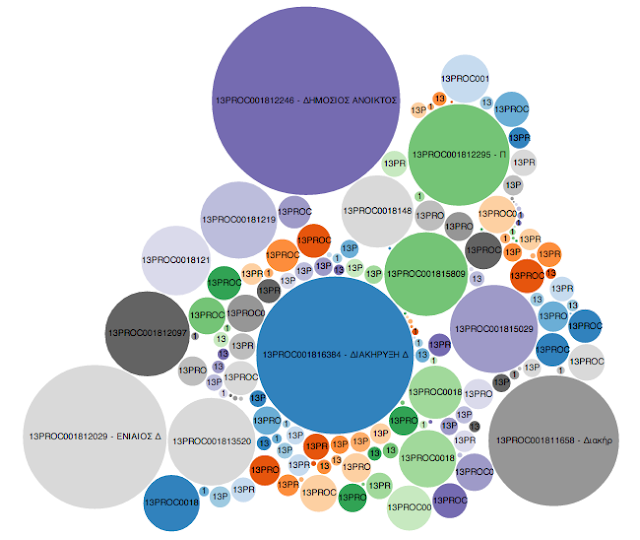

Now let's see a use case where Celery untied our hands. As you might already know, for quite some time we have been developing Python, Selenium-based scripts for web scraping and browser automation. Occasionally, while executing a script, we came across data records or cases that had to be dealt with separately by another process or script. For instance, in the context of the recent e-procurement project, when scraping the detail page of a payment (published on the Greek e-procurement platform) through DEiXToBot, you could find a reference to a relevant contract which you would also like to download and scrape. Additionally, this contract could link to a tender notice, and the latter may be a corrected version of an existing tender or connect in turn with another decision or document.

Thus, we thought it would be more convenient to add the unique codes or identifiers of these extra documents to a queue system and let a background worker get the job done asynchronously. It should be noted that the lack of persistent links on the e-procurement website made it harder to download a detail page programmatically at a later stage: you could access it only by performing a new search with its ID and automating a series of steps with Selenium, depending on the type of the target document.

So, it was not long before we installed Celery on our Linux server and started experimenting with it. We were amazed by its simplicity and efficiency. We quickly wrote a script that fitted the bill for the e-procurement scenario described above. The code provided an elegant and practical solution to the problem at hand and looked something like this (note the recursion!):

    from celery import Celery

    app = Celery('tasks', backend='amqp', broker='amqp://')

    @app.task
    def download(id):
        # ... selenium_stuff ...
        # reference_found and new_id are set by the scraping code above
        if reference_found:
            download.delay(new_id)  # delay sends a task message
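The effect of the recursive call can be sketched without a broker or Selenium. In the simulation below, `REFERENCES`, `crawl` and all document IDs are hypothetical, and appending to a local deque stands in for `download.delay(new_id)`. The `seen` set is an extra safeguard against queueing the same document twice; it is an assumption of this sketch, not something the snippet above shows.

```python
from collections import deque

# Hypothetical reference graph: each document ID maps to the IDs it links to.
REFERENCES = {
    "payment-1": ["contract-7"],
    "contract-7": ["tender-3"],
    "tender-3": ["tender-2"],    # e.g. a corrected version of an earlier tender
    "tender-2": [],
}

def crawl(start_id, references):
    """Process start_id and, transitively, every document it references."""
    pending = deque([start_id])
    seen = {start_id}
    downloaded = []
    while pending:
        doc_id = pending.popleft()
        downloaded.append(doc_id)            # stand-in for the Selenium work
        for new_id in references.get(doc_id, []):
            if new_id not in seen:           # skip documents already queued
                seen.add(new_id)
                pending.append(new_id)       # plays the role of download.delay(new_id)
    return downloaded

order = crawl("payment-1", REFERENCES)
print(order)
```

A single payment ID is enough to pull in the contract, the tender notice and the earlier tender it corrects, because each processed document enqueues whatever it references. With Celery, a worker would typically be started with `celery -A tasks worker` (assuming the module is named `tasks.py`), and the chain would be kicked off by one `download.delay(...)` call.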

In conclusion, we are happy to have found Celery. It is really promising, and we thought it would be nice to share this news with you. We look forward to using it further for our heavy scraping needs and are glad to have added it to our arsenal.